He wanted to offer a then-unheard-of 1 gigabyte of storage space so that users would never have to spend hours sorting and deleting their emails. Ryan Tate’s book The 20% Doctrine says this was roughly five hundred times the storage space offered by competitors Hotmail.com and Yahoo! Mail.

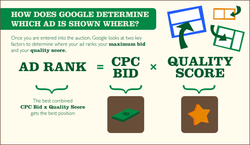

The question then was how to pay for this free extra storage. Tate says Buchheit’s manager, Marissa Mayer, wanted him to charge users for it. Instead, Buchheit started looking at contextual advertisements of the kind used in AdWords. AdWords shows Google searchers advertisements based on their search terms, on the right-hand side of the search results page as well as at the top. For instance, if someone searched for ‘hotel’, advertisements for hotels would turn up.

Buchheit wondered if the same logic could be extended to email. What if ads were shown beside emails based on the contents of the mail, he thought. It was brilliant on the face of it, but sounded equally creepy, says Tate. Mayer expressed her misgivings bluntly. “People are going to think there are people here reading their emails and picking out the ads, and it’s going to be terrible,” she recalled thinking in a Stanford University podcast recorded later.

The podcast also recounted how Buchheit broke his promise to Mayer not to work on combining advertisements with email. Mayer recalled: “I remember leaving, and when I walked out the door I stopped for a minute, and I remember I leaned back and I said, ‘So Paul, we agreed we are not exploring the whole ad thing now, right?’ And he was like, ‘Yup, right’.”

Tate says Buchheit broke his word almost immediately. “Over the next few hours, he hacked together a prototype of the ‘ad thing’, a system that would read your email and automatically find a related ad to display next to it.”

HE USED A PORN FILTER TO CREATE ADSENSE

Tate also details how Buchheit went about creating the AdSense building blocks. Just as he adapted the Usenet search experience to create Gmail, he started working with another tool, a porn filter no less, to create AdSense. This was basically a code editor he had created to screen for adult content. It was probably used to switch the Safe Search filters on and off in Google Search Settings. “Normally, the filter examined a batch of known porn pages and listed words that occurred disproportionately within those pages. Other pages containing those words were then assumed to be porn. Buchheit instead turned the filter on Gmail messages, using the resulting keywords to select advertisements from Google’s AdWords database.”
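The idea Tate describes, finding words that occur disproportionately in one batch of text and then using them to pick an ad, can be illustrated with a small sketch. This is not Buchheit’s code and says nothing about how Google actually implemented it; the function names, the smoothed frequency ratio used for scoring, and the tiny ad table are all assumptions made up for illustration.

```python
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def distinctive_words(target_docs, background_docs, top_n=5, min_count=2):
    """Return words that are over-represented in target_docs.

    Hypothetical scoring: ratio of add-one-smoothed word frequencies
    in the target text versus the background text. Only words with a
    ratio above 1 (more frequent in the target) are kept.
    """
    target = Counter(w for d in target_docs for w in tokenize(d))
    background = Counter(w for d in background_docs for w in tokenize(d))
    t_total = sum(target.values())
    b_total = sum(background.values())
    vocab = len(set(target) | set(background))

    scores = {}
    for word, count in target.items():
        if count < min_count:
            continue  # ignore words seen too rarely to be meaningful
        p_target = (count + 1) / (t_total + vocab)
        p_background = (background[word] + 1) / (b_total + vocab)
        ratio = p_target / p_background
        if ratio > 1:
            scores[word] = ratio
    # Highest ratio first; ties broken alphabetically for stable output.
    return sorted(scores, key=lambda w: (-scores[w], w))[:top_n]


def pick_ad(keywords, ads):
    """Pick the ad whose keyword set overlaps most with the keywords.

    `ads` is a hypothetical mapping of ad text -> set of ad keywords.
    Returns None when no ad shares a keyword with the message.
    """
    if not ads:
        return None
    wanted = set(keywords)
    best = max(ads, key=lambda ad: len(ads[ad] & wanted))
    return best if ads[best] & wanted else None
```

Applied to an email about a hiking trip and a background of ordinary messages, `distinctive_words` would surface a word like “hiking”, and `pick_ad` would then match it against an ad tagged with that keyword, much like the hiking boots ad Mayer saw.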

Tate advises youngsters pursuing side projects to copy Buchheit’s method of adapting old work. “As tempting as it is [to] start from a clean slate, always look for opportunities to use something old to create something fresh,” he writes.

HOW BUCHHEIT WON OVER THE DECISION MAKERS

Although Buchheit directly rebelled against his boss in putting together the delivery mechanism of what turned out to be AdSense, Tate says it helped that Google had a culture where results prevail over preconceptions.

When Marissa Mayer opened her Gmail account the next day, only to see ads running beside her emails, her immediate instinct was to summon Buchheit for an explanation. But she delayed action, thinking he deserved a few more hours of sleep after having worked the whole night. Tate writes, “While she waited, Mayer checked her Gmail. There was an email from a friend who invited her to go hiking, and next to it, an ad for hiking boots. Another email was about Al Gore coming to speak at Stanford University, and next to it was an ad for books about Al Gore. Just a couple of hours after the system had been invented, Mayer grudgingly admitted to herself, AdSense was already useful, entertaining, and relevant.”

Tate writes that, like Mayer, Larry Page and Sergey Brin loved AdSense. “In short order, the Google high command decided AdSense would be a top priority. It was a no-brainer: Google’s main revenue source, AdWords, placed contextual ads alongside search results. But search results were just 5 percent of Web views; AdSense promised to open up the other 95 percent to ads, since it could go inside any Web page,” Tate writes.

According to Tate, it took just six months for AdSense to launch. In June 2003, it was made available to the public as a widget that any publisher could attach to any Web page. It generates more than $10 billion per year for Google. Gmail itself, for which Buchheit first developed AdSense, launched to the public on April 1, 2004, and was initially taken by many to be an April Fools’ Day practical joke. Today it’s probably the world’s largest free Webmail service, as well as the pivot around which the Google Apps for Business suite functions.

So what lessons can people who run 20% projects take from Buchheit’s innovations in developing Gmail and AdSense?

- First of all, your product should scratch an existing itch.

- Then you should be smart enough to secure the backing of powerful decision-makers. Sponsorship is important, especially if you want the company to commit its best resources to what may well turn out to be a long project.

- Also, be ready to back your ideas with data. Buchheit was ready to show through his prototypes that his email was actually an improvement over existing ones in the market and also that his advertisement system worked.

- Be ready to adapt old work. Buchheit adapted what he had learned from fixing search in Google Groups to create Gmail. He repurposed the tool he had built to filter porn sites to scan email text and select relevant ads.

- Leverage access to system administrators and computer systems. Buchheit had special access to the databases powering Google AdWords. Without dipping into them, he could never have got the ads to run alongside his Gmail texts and develop AdSense.

- Try snatching resources under the cover of a more established project. Buchheit was supposedly working on the high-profile email project. But to fund it and make it stand on its own feet, he developed AdSense on the side.

- Finally, you can get away with a little defiance of authority so long as there are results to show for it. Buchheit explicitly promised his then boss, Marissa Mayer, that he would not work on developing AdSense, but he went ahead and did it anyway. She herself became a backer of AdSense once she realized that it worked.

e.o.m.
